Technology for a Better World

IdeAmigos: Human-Orchestrated Multi-agent Tool for Supporting End-to-End Brainstorming

In preparation for DIS 26: ACM Designing Interactive Systems

Hajun Kim, Jeongeon Park

Research Focus:

This study investigates the effectiveness of 'user-led orchestration', a method in which a user actively collaborates with a team of independent AI agents. To test this concept, we introduce IdeAmigos, a multi-agent tool designed to support the entire brainstorming process, from divergence through refinement to convergence. The core of this orchestration method is enabling the user to strategically steer the collaboration by directly tuning individual agent attributes and guiding the global conversational flow.

System of IdeAmigos

Many multi-agent systems focus on automation, an approach that leaves little room for leveraging unique human strengths. IdeAmigos is instead designed to let users orchestrate real-time interactions with multiple agents. Its core components are:

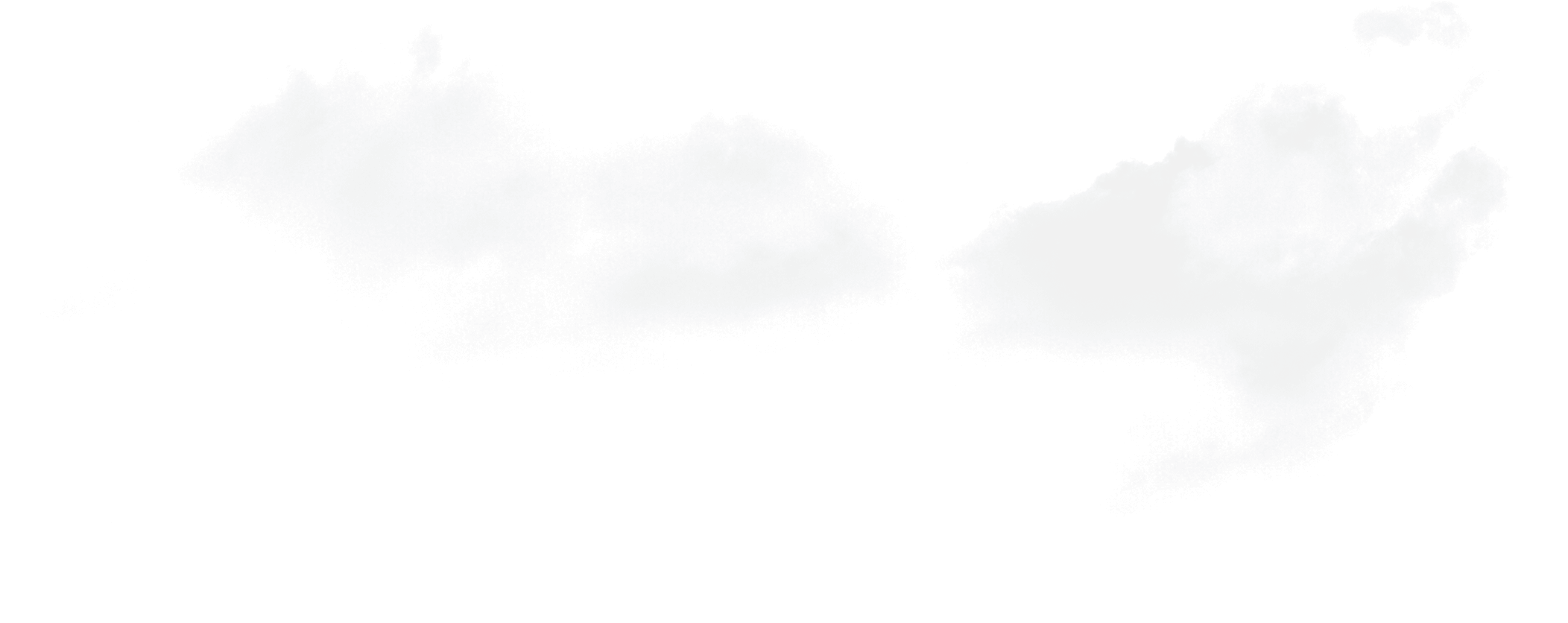

- Interface: The system is divided into three main parts: (a) the Conversational Space for user-agent dialogue, (b) the Interactive Tuning panel for controlling agent behavior, and (c) the Idea Space for managing ideas.

- Orchestration (Interactive Tuning): Users orchestrate the agents through the Interactive Tuning panel, which offers control at two levels:

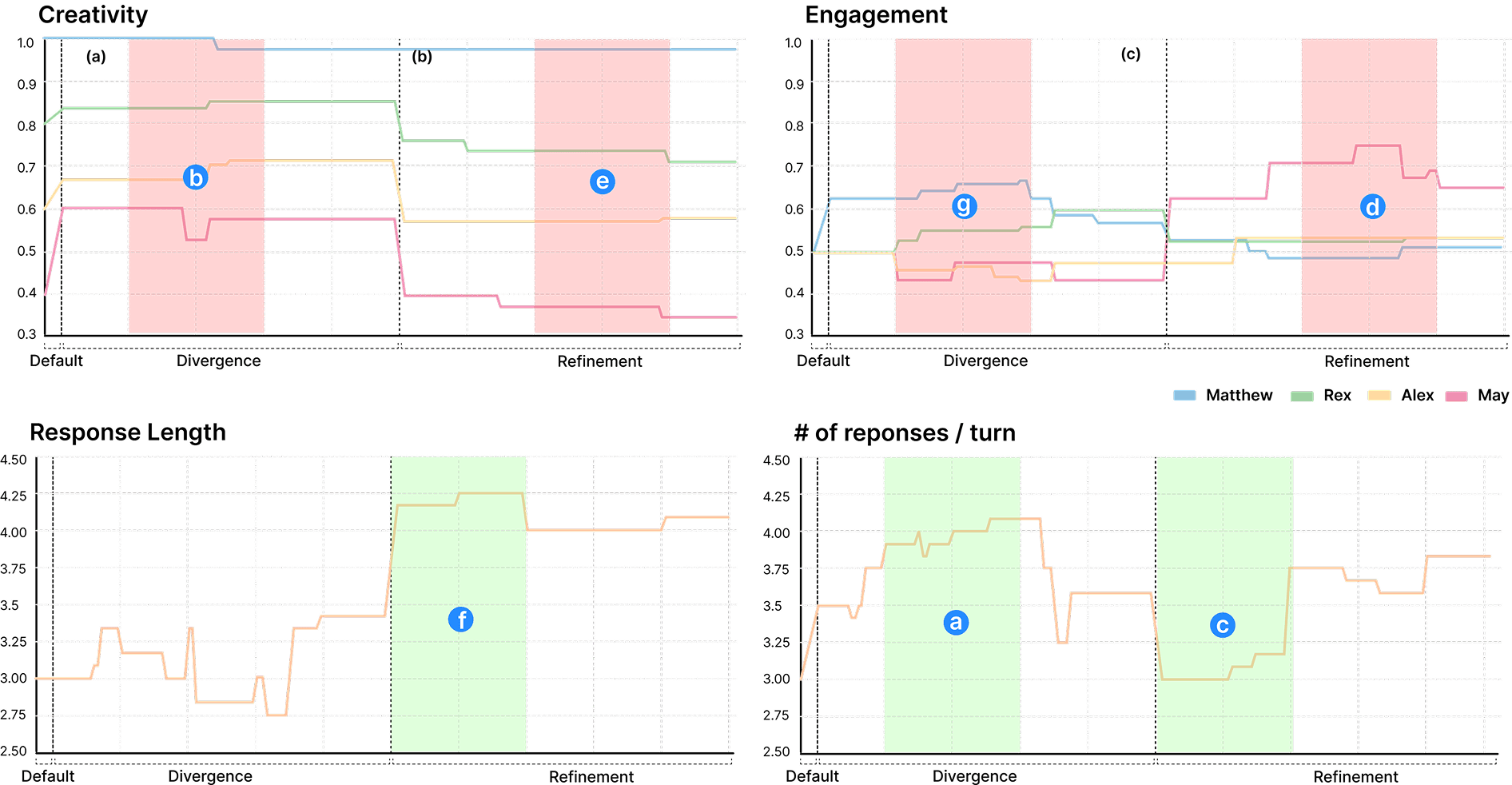

- Individual Agent Tuning: Users can adjust sliders for each agent's Creativity (to modulate response variability) and Engagement (to control participation frequency).

- Group Flow Tuning: Users can also set the Response Length and the # of responses / turn (number of agent responses per user input) for all agents at once, managing the conversation's pace and density.

- Idea Management (Idea Space): Ideas proposed by both the user and the agents are automatically captured and saved as sticky notes in the Idea Space. Users can trace the full evolution of an idea, as each note retains its development history (e.g., how it was combined or expanded).
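The two levels of tuning above can be represented as a small piece of state. The sketch below is a hypothetical illustration, not the IdeAmigos implementation. It assumes every slider is normalized to [0, 1], that Creativity maps linearly onto an LLM sampling temperature, and that Response Length maps linearly onto a token budget. The class names, ranges, and mappings are all assumptions.

```python
from dataclasses import dataclass


def _check_unit(name: str, value: float) -> None:
    # Hypothetical convention: every slider is normalized to [0.0, 1.0].
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"{name} must be in [0, 1], got {value}")


@dataclass
class AgentTuning:
    """Per-agent sliders (hypothetical names)."""
    name: str
    creativity: float = 0.5  # modulates response variability
    engagement: float = 0.5  # controls participation frequency

    def __post_init__(self) -> None:
        _check_unit("creativity", self.creativity)
        _check_unit("engagement", self.engagement)

    def temperature(self, low: float = 0.2, high: float = 1.2) -> float:
        # Assumption: Creativity is mapped linearly onto sampling temperature.
        return low + self.creativity * (high - low)


@dataclass
class GroupTuning:
    """Group-flow sliders applied to all agents at once (hypothetical names)."""
    response_length: float = 0.5  # normalized Response Length slider
    responses_per_turn: int = 1   # agent responses per user input

    def __post_init__(self) -> None:
        _check_unit("response_length", self.response_length)
        if self.responses_per_turn < 1:
            raise ValueError("responses_per_turn must be at least 1")

    def max_tokens(self, shortest: int = 60, longest: int = 400) -> int:
        # Assumption: Response Length is mapped linearly onto a token budget.
        return round(shortest + self.response_length * (longest - shortest))


matthew = AgentTuning("Matthew", creativity=0.9, engagement=0.8)
group = GroupTuning(response_length=1.0, responses_per_turn=3)
print(round(matthew.temperature(), 2), group.max_tokens())
```

Keeping per-agent and group settings in separate objects mirrors the panel's split between Individual Agent Tuning and Group Flow Tuning.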

In practice, a user collaborates with agents with diverse personas through an ongoing dialogue in the Conversational Space. Throughout this conversation, the user can steer the ideation by adjusting tuning sliders, contributing their own thoughts, or by selecting and combining ideas from the Idea Space for further refinement.
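The Idea Space's ability to trace how an idea evolved could be modeled by having each note point back to the notes it came from. This is a hypothetical sketch: `IdeaNote`, `combine`, and `expand` are illustrative names, and the real system's storage format is not described here. A note's history is rebuilt by walking its parents.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class IdeaNote:
    """A sticky note that remembers how it was produced (hypothetical model)."""
    text: str
    author: str                   # "user" or an agent's name
    operation: str = "proposed"   # e.g. "proposed", "combined", "expanded"
    parents: list[IdeaNote] = field(default_factory=list)

    def history(self) -> list[str]:
        """Lineage of this note, ancestors first (depth-first over parents)."""
        lines: list[str] = []
        for parent in self.parents:
            lines.extend(parent.history())
        lines.append(f"{self.operation} by {self.author}: {self.text}")
        return lines


def combine(notes: list[IdeaNote], text: str, author: str) -> IdeaNote:
    # A combined idea keeps every source note as a parent.
    return IdeaNote(text, author, "combined", list(notes))


def expand(note: IdeaNote, text: str, author: str) -> IdeaNote:
    # An expanded idea keeps the single note it elaborates on.
    return IdeaNote(text, author, "expanded", [note])


a = IdeaNote("idea A", "Matthew")
b = IdeaNote("idea B", "May")
c = combine([a, b], "idea A + B", "user")
d = expand(c, "detailed version of A + B", "May")
for line in d.history():
    print(line)
```

Because the lineage is a tree walk, an ancestor reached through two different parents would be listed twice; a production version would likely deduplicate.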

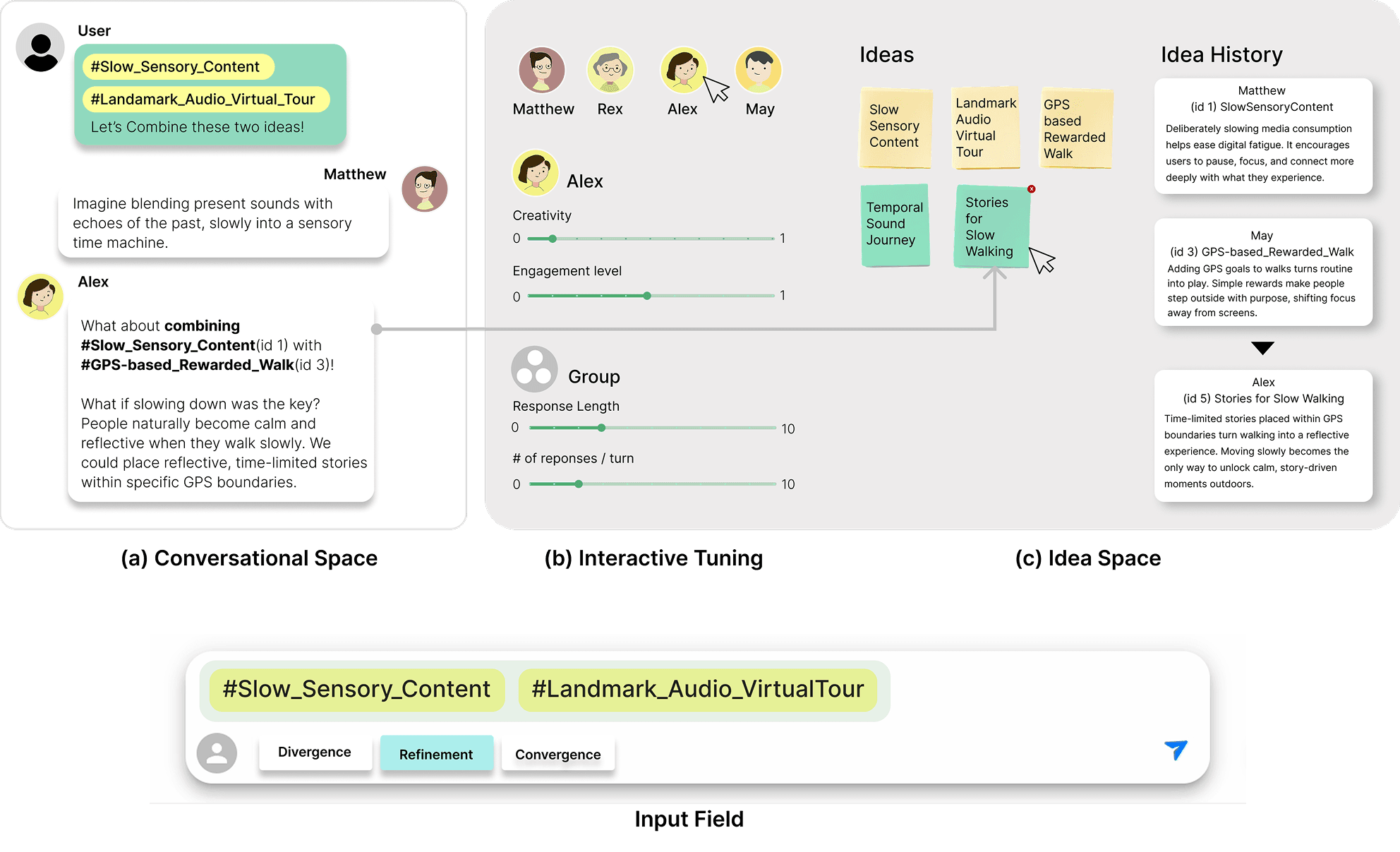

Agent Persona Template: Each agent has an independent persona (e.g., 'Matthew' the Radical Innovator, 'May' the Practical Realist), so that together the agents form a complementary team for robust deliberation. Each persona is kept consistent by a template composed of: (a) a Shared Message History, (b) Persona-Specific Instructions (defining the agent's core persona and its dynamic role in each phase), and (c) Common Instructions (outlining shared objectives and rules).
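The three-part template above can be sketched as a function that assembles one agent's prompt. This assumes a chat-style message format with `system`, `user`, and `assistant` roles; the instruction wording, the phase roles, and the choice to render other speakers' turns as `user` messages are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical wording for (c) Common Instructions.
COMMON_INSTRUCTIONS = (
    "You are one member of a brainstorming team. Build on or challenge "
    "other members' ideas, and keep each reply focused on the topic."
)


@dataclass
class Persona:
    name: str
    core: str                    # (b) core persona
    phase_roles: dict[str, str]  # (b) dynamic role for each phase


def build_messages(persona: Persona, phase: str,
                   shared_history: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Assemble one agent's chat-style prompt from the three template parts."""
    system = "\n\n".join([
        f"You are {persona.name}, {persona.core}.",
        f"Current phase: {phase}. Your role: {persona.phase_roles[phase]}",
        COMMON_INSTRUCTIONS,
    ])
    messages = [{"role": "system", "content": system}]
    # (a) Shared Message History: every agent sees the same transcript.
    # Assumption: the agent's own past turns become "assistant" messages and
    # everyone else's (the user and other agents) become "user" messages.
    for speaker, text in shared_history:
        role = "assistant" if speaker == persona.name else "user"
        messages.append({"role": role, "content": f"{speaker}: {text}"})
    return messages


matthew = Persona(
    name="Matthew",
    core="the Radical Innovator",
    phase_roles={  # hypothetical phase roles
        "divergence": "propose bold, unconventional ideas",
        "refinement": "push promising ideas further",
        "convergence": "argue for the most novel candidates",
    },
)
history = [("user", "How might we reduce digital fatigue?"),
           ("May", "Start with small changes to notification habits."),
           ("Matthew", "What if devices enforced a daily quiet hour?")]
msgs = build_messages(matthew, "divergence", history)
```

Only the persona block changes between agents; the history and common instructions are identical, which is what lets each agent react to the same conversation from its own perspective.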

Responder Selection Algorithm: Given the number of responses per turn set by the user, the system uses a decentralized mechanism to select which agent speaks next. This algorithm integrates (a) Agent Mutual Evaluation (based on the agents' distinct persona perspectives), (b) a Participation Balancing Penalty (to prevent one agent from dominating), and (c) Human-Guided Scoring (derived from the user's 'Engagement' slider setting, which acts as the primary influence).
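One way to combine the three signals above is a weighted score per agent. The sketch below is an assumption, not the published algorithm: it takes peer ratings in [0, 1] that each agent has already produced (the decentralized part), uses each agent's share of past turns as the balancing penalty, and gives the Engagement slider the largest weight to reflect its role as the primary influence. The weights and the deterministic top-n selection are hypothetical.

```python
from __future__ import annotations


def select_responders(
    agents: list[str],
    peer_scores: dict[str, dict[str, float]],
    turns_spoken: dict[str, int],
    engagement: dict[str, float],
    n: int,
    w_human: float = 0.5,
    w_peer: float = 0.3,
    w_balance: float = 0.2,
) -> tuple[list[str], dict[str, float]]:
    """Rank agents and return the top n for this turn, plus all scores."""
    total_turns = sum(turns_spoken.values())
    scores: dict[str, float] = {}
    for agent in agents:
        # (a) Mutual evaluation: mean rating the other agents gave this agent.
        votes = [peer_scores[e][agent] for e in agents
                 if e != agent and agent in peer_scores.get(e, {})]
        peer = sum(votes) / len(votes) if votes else 0.0
        # (b) Balancing penalty: this agent's share of all turns so far.
        share = turns_spoken.get(agent, 0) / total_turns if total_turns else 0.0
        # (c) Human-guided score: the Engagement slider, given the largest weight.
        scores[agent] = (w_human * engagement.get(agent, 0.0)
                         + w_peer * peer - w_balance * share)
    # Highest score first; ties broken by name so the result is deterministic.
    ranked = sorted(agents, key=lambda a: (-scores[a], a))
    return ranked[:n], scores


agents = ["Matthew", "May", "Agent C"]  # "Agent C" is a placeholder name
peer = {e: {a: 0.5 for a in agents if a != e} for e in agents}
chosen, _ = select_responders(
    agents, peer, turns_spoken={a: 0 for a in agents},
    engagement={"Matthew": 0.9, "May": 0.2, "Agent C": 0.5}, n=2)
print(chosen)  # ['Matthew', 'Agent C']
```

A system that wants less predictable turn-taking could sample agents in proportion to their scores instead of taking the top n.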

Experiment

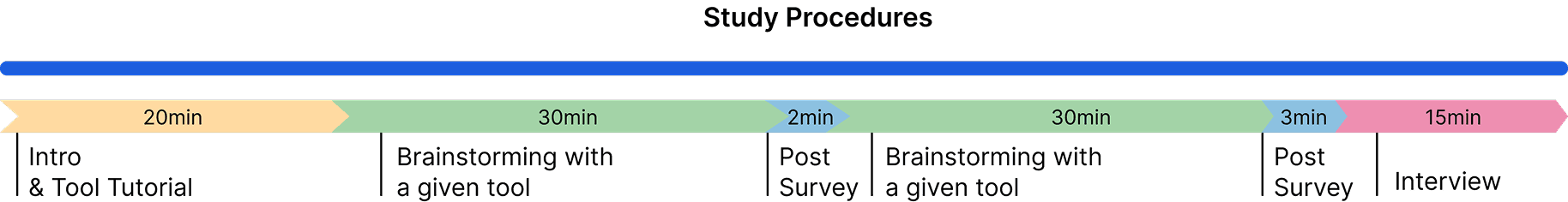

A user study was conducted with 12 UX design students.

Task Goal: In each session, participants performed a 30-minute brainstorming task. The goal was to generate ideas (divergence), then refine and combine them (refinement), and finally select their top five ideas (convergence).

Methodology: The study used a within-subjects design: each participant completed the task twice, once under each of two conditions:

- IdeAmigos: A multi-agent system (4 agents).

- Baseline: A single-agent system (1 agent) with an identical interface.

Two different open-ended topics (e.g., "reducing digital fatigue") were used. The order of conditions and topics was counterbalanced across participants to minimize learning effects.
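The counterbalancing described above is not specified in detail. A minimal sketch, assuming a full crossing of condition order and topic order, gives 2 × 2 = 4 session sequences, each used by 3 of the 12 participants. The topic names are placeholders and the participant IDs are hypothetical.

```python
from collections import Counter
from itertools import permutations, product


def counterbalanced_schedule(participants, conditions, topics):
    """Assign each participant an ordered list of (condition, topic) sessions."""
    # Every combination of condition order x topic order: 2! * 2! = 4 sequences.
    orders = list(product(permutations(conditions), permutations(topics)))
    schedule = {}
    for i, participant in enumerate(participants):
        condition_order, topic_order = orders[i % len(orders)]
        # Session k pairs the k-th condition with the k-th topic.
        schedule[participant] = list(zip(condition_order, topic_order))
    return schedule


participants = [f"P{i:02d}" for i in range(1, 13)]
schedule = counterbalanced_schedule(
    participants, ("IdeAmigos", "Baseline"), ("Topic A", "Topic B"))
print(schedule["P01"])  # [('IdeAmigos', 'Topic A'), ('Baseline', 'Topic B')]
print(Counter(tuple(s) for s in schedule.values()).most_common(1)[0][1])  # 3
```

With this scheme, half of the participants start with each condition, and each condition is paired with each topic equally often.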

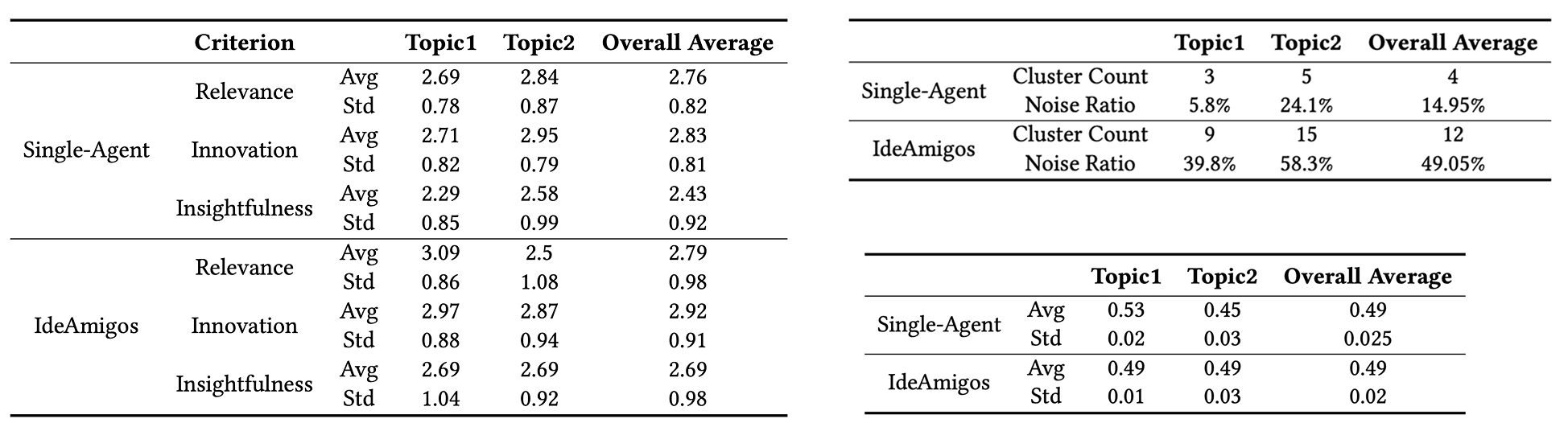

Metrics: We measured idea quality (rated by experts for innovation, relevance, and insightfulness), idea diversity (using semantic clustering), originality, and users' subjective satisfaction (via post-task surveys).
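The clustering procedure behind the diversity metric is not detailed here. The sketch below substitutes a simple single-linkage threshold clustering over idea embeddings, which are assumed to be precomputed by some sentence-embedding model. Ideas whose cosine similarity reaches a threshold (0.8, a hypothetical value) are linked. Connected groups of two or more ideas count as clusters, and singletons count as outliers.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def cluster_ideas(embeddings: list[list[float]], threshold: float = 0.8):
    """Single-linkage clustering: link ideas whose cosine similarity >= threshold."""
    parent = list(range(len(embeddings)))

    def find(i: int) -> int:
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            if cosine(embeddings[i], embeddings[j]) >= threshold:
                parent[find(i)] = find(j)

    groups: dict[int, list[int]] = {}
    for i in range(len(embeddings)):
        groups.setdefault(find(i), []).append(i)
    clusters = [g for g in groups.values() if len(g) > 1]
    outliers = [g[0] for g in groups.values() if len(g) == 1]
    return clusters, outliers


# Toy 2-D "embeddings"; real ones would come from a sentence-embedding model.
ideas = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.1, 0.99], [-1.0, 0.0]]
clusters, outliers = cluster_ideas(ideas)
print(len(clusters), outliers)  # 2 [4]
```

Counting both clusters and outliers per session gives two complementary diversity signals: more clusters means more distinct themes, and more outliers means more ideas unlike any other.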

Key Results

- Idea Quality & Diversity: IdeAmigos produced higher-quality ideas compared to the single-agent baseline. Notably, the top 3 highest-rated ideas for both topics were all generated using IdeAmigos. It also generated a broader and more diverse range of ideas, producing more semantic clusters and outliers.

- Idea Homogeneity: Despite greater within-session diversity, IdeAmigos showed inter-user homogeneity comparable to the baseline, suggesting that agents sharing a base model are limited in fostering originality across users.

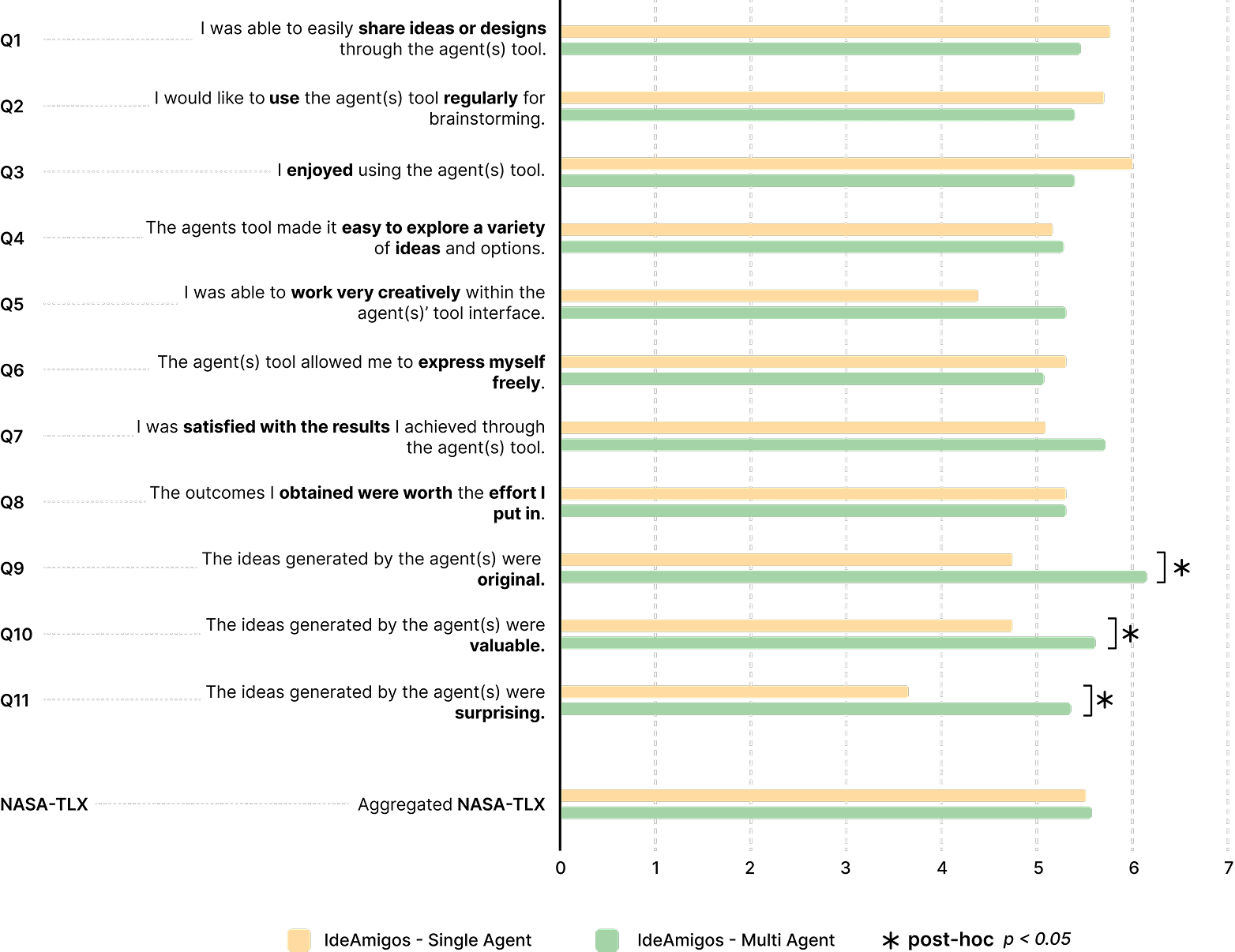

- User Perception: Participants rated the ideas from IdeAmigos as statistically significantly more original (p=0.013), valuable (p=0.047), and surprising (p=0.018) than those from the baseline.

- Usability: Despite the better ideas, participants reported lower usability satisfaction with IdeAmigos. They reported that managing multiple conversations and reading more text increased fatigue and split their attention.
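The inter-user homogeneity comparison in the results above depends on how homogeneity is measured, which is not specified here. A minimal sketch, assuming precomputed idea embeddings, treats homogeneity as the mean cosine similarity over all pairs of ideas that come from two different participants. Higher values mean participants converged on more similar ideas. The participant IDs are hypothetical.

```python
from __future__ import annotations

import math
from itertools import combinations


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def inter_user_homogeneity(ideas_by_user: dict[str, list[list[float]]]) -> float:
    """Mean cosine similarity over all idea pairs drawn from two different users."""
    sims = [cosine(a, b)
            for u, w in combinations(ideas_by_user, 2)
            for a in ideas_by_user[u]
            for b in ideas_by_user[w]]
    if not sims:
        raise ValueError("need ideas from at least two users")
    return sum(sims) / len(sims)


same = {"P01": [[1.0, 0.0]], "P02": [[1.0, 0.0]], "P03": [[2.0, 0.0]]}
spread = {"P01": [[1.0, 0.0]], "P02": [[0.0, 1.0]]}
print(inter_user_homogeneity(same), inter_user_homogeneity(spread))  # 1.0 0.0
```

Computing this score separately for each condition and topic would allow the kind of comparison reported above. Pairs within the same participant are excluded so that the score reflects convergence across users rather than within-session repetition.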

User Strategies (Orchestration)

Participants actively orchestrated the agents using specific strategies:

- Phase-Based Tuning: Users adapted the controls based on the brainstorming phase.

- Divergence: They increased the # of responses / turn to "Listen Broadly" and quickly gather a wide range of perspectives.

- Refinement: They increased the Response Length to "Listen Deeply" and get more detailed, in-depth feedback on specific ideas.

- Role-Based Selection: Users dynamically selected agents based on their needs. They increased the 'Engagement' of the creative agent (Matthew) during divergence and the practical agent (May) during refinement.

- Adopting a Facilitator Role: Many users shifted from being idea generators to acting as 'facilitators'. They focused on directing the agents, identifying good ideas, and combining agent-generated content rather than contributing their own.
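The phase-based and role-based strategies above amount to switching between slider presets. The sketch below encodes them as a hypothetical preset table. The numeric values are illustrative only and are not settings reported by participants. Only the direction of each change (more responses per turn for divergence, longer responses for refinement, higher Engagement for Matthew or May) follows the observed strategies.

```python
from copy import deepcopy

# Illustrative values only; the study does not report the exact settings used.
PHASE_PRESETS = {
    # "Listen Broadly": raise the number of responses per turn.
    "divergence": {"responses_per_turn": 4, "response_length": 0.4,
                   "engagement": {"Matthew": 0.9, "May": 0.4}},
    # "Listen Deeply": raise the response length.
    "refinement": {"responses_per_turn": 2, "response_length": 0.8,
                   "engagement": {"Matthew": 0.4, "May": 0.9}},
}


def apply_preset(settings: dict, phase: str) -> dict:
    """Return a new settings dict with the phase preset layered on top."""
    preset = PHASE_PRESETS[phase]
    updated = deepcopy(settings)  # leave the caller's settings untouched
    updated["responses_per_turn"] = preset["responses_per_turn"]
    updated["response_length"] = preset["response_length"]
    # Only agents named in the preset change; other agents keep their value.
    updated.setdefault("engagement", {}).update(preset["engagement"])
    return updated


current = {"responses_per_turn": 1, "response_length": 0.5,
           "engagement": {"Matthew": 0.5, "May": 0.5, "Agent C": 0.5}}
diverge = apply_preset(current, "divergence")
refine = apply_preset(diverge, "refinement")
```

Returning a new dictionary rather than mutating in place makes it easy to keep the previous phase's settings around, for example to let a user undo a phase switch.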

Key Findings

- Model Homogeneity: All agents were built on the same base LLM, which we suggest contributed to the high homogeneity of ideas across users. Future work should explore assigning different base models or fine-tuning strategies to each agent to enhance true divergence.

- Revisiting Social Presence and User Bias: This work calls for a fundamental reexamination of social presence and user bias in multi-agent contexts. We observed that biases studied in single-agent collaboration (such as anchoring effects and social loafing) reemerge in new forms in a multi-agent setting. The very act of agents interacting with each other led users to perceive them as a 'team,' creating new cognitive biases. Future work must investigate how these emerging social dynamics shape user perception and reasoning, and explore how to design interfaces that preserve critical thinking.

- Interaction Load: Participants found managing multiple text-based conversations cognitively demanding. We see an opportunity to explore multimodal interfaces, such as text-to-speech (TTS) or avatars, to make the interaction more accessible and less mentally taxing.